How accurate is sampled Google Analytics data? A simple experiment to find out

If you work with large volumes of data in Google Analytics, you have likely encountered sampled data at some point.

Seeing that your data has been sampled might make you suspicious – can I really trust the data if it is sampled at 50%? What about only 5%?

To get to the bottom of this, I did a simple experiment to see how accurate sampled data is.

What is sampling?

Google Analytics uses sampling to reduce the time it takes to run a report.

For free Google Analytics users, the threshold where sampling begins is 500,000 sessions (for Analytics 360 users, it is 100 million).

So, assuming you’re a free user querying a date range that contains 1 million sessions, Google Analytics will select a random sample of about 50% of those sessions (roughly 500,000 of the 1 million) and scale the results up to estimate the totals.
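The arithmetic above can be sketched in a few lines of Python. This is a simplification: it assumes the sample size equals the 500,000-session threshold and that totals are estimated by dividing sampled counts by the sampling rate. The helper names `sampling_rate` and `scale_up` are hypothetical, and Google’s actual estimation may be more involved.

```python
def sampling_rate(total_sessions: int, threshold: int = 500_000) -> float:
    """Approximate fraction of sessions read when a query exceeds the threshold.

    Simplifying assumption: the sample size equals the threshold.
    Use threshold=100_000_000 for Analytics 360.
    """
    if total_sessions <= threshold:
        return 1.0  # at or under the threshold: no sampling
    return threshold / total_sessions


def scale_up(sampled_value: float, rate: float) -> float:
    """Estimate a full-population total from a value counted in the sample."""
    return sampled_value / rate


rate = sampling_rate(1_000_000)
print(rate)                    # 0.5
print(scale_up(12_000, rate))  # 24000.0 (hypothetical 12,000 sampled pageviews)
```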

But you will not encounter sampling on every report.

Default reports, for example, are not subject to sampling. One such report uses Page as the primary dimension and Pageviews as the metric.

However, ad hoc reports are sampled whenever the query exceeds the threshold. One example, which I used for my experiment, is a report with Page as the primary dimension, Default Channel Grouping as the secondary dimension, and Pageviews as the metric.

Note that adding a secondary dimension does not always trigger sampling. For example, if you took the default report above (Page as the primary dimension, Pageviews as the metric) and added Month of Year as the secondary dimension, you would not be subject to sampling, because Month of Year is part of the default report.

To find the sampling rate of a report in the Google Analytics interface, hover over the shield icon next to the report title. If you’re using the API, Google has instructions for finding this number.
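If you pull data through the Analytics Reporting API v4, the sampling rate can be computed from the response. This sketch assumes the v4 JSON shape, in which a sampled report’s `data` object carries `samplesReadCounts` and `samplingSpaceSizes` (one entry per date range, serialized as strings); check Google’s documentation for your API version before relying on it. The function `sampling_rates` and the response fragment are hypothetical.

```python
from typing import Any


def sampling_rates(report: dict[str, Any]) -> list[float]:
    """Return the sampling rate for each date range in one v4 report.

    Assumes the Analytics Reporting API v4 response shape: sampled reports
    include `samplesReadCounts` and `samplingSpaceSizes` under `data`.
    """
    data = report.get("data", {})
    reads = data.get("samplesReadCounts")
    spaces = data.get("samplingSpaceSizes")
    if not reads or not spaces:
        # Fields are absent for unsampled data; `totals` has one entry per date range.
        return [1.0] * max(1, len(data.get("totals", [])))
    return [int(r) / int(s) for r, s in zip(reads, spaces)]


# Hypothetical response fragment for a report sampled at 50%.
example = {"data": {"samplesReadCounts": ["499630"], "samplingSpaceSizes": ["999260"]}}
print(sampling_rates(example))  # [0.5]
```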

Setting up the experiment

For this experiment, I picked a report I knew would trigger sampling.

I wanted to look in depth at a single high-traffic page to see where its traffic was coming from. So I used Page as the primary dimension, Default Channel Grouping as the secondary dimension, and Pageviews as the metric.

I created a spreadsheet with six columns: channel, date range, sampling rate, sampled pageviews, actual pageviews, and the percentage difference between the sampled and actual pageview counts.
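The last column can be computed with a simple formula. This sketch assumes the difference is measured relative to the actual count and keeps its sign, so over-reporting is positive and under-reporting is negative; the exact spreadsheet formula isn’t specified, and the function name and figures are hypothetical.

```python
def percent_difference(sampled: float, actual: float) -> float:
    """Signed error of a sampled count relative to the actual count, in percent.

    Positive = the sample over-reported; negative = it under-reported.
    """
    if actual == 0:
        raise ValueError("actual pageviews must be non-zero")
    return (sampled - actual) * 100 / actual


print(percent_difference(10_400, 10_000))  # 4.0
print(percent_difference(9_700, 10_000))   # -3.0
```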

For each channel (e.g., Organic Search), I ran the sampled report over date ranges of different lengths, chosen to trigger sampling rates of 5%, 10%, 25%, 33%, 50%, 66%, 75%, and 100%.
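One way to choose a date range for each target sampling rate is to work backward from average daily traffic. This hypothetical helper assumes steady traffic (total sessions ≈ days × average daily sessions) and the 500,000-session threshold for free accounts. Real traffic fluctuates, so the rate shown by the shield icon is what actually counts.

```python
import math


def days_for_rate(target_rate: float, avg_daily_sessions: float,
                  threshold: int = 500_000) -> int:
    """Rough number of days a query should span to be sampled near target_rate.

    Assumes steady traffic: total sessions ~= days * avg_daily_sessions,
    and the sampling rate ~= threshold / total sessions.
    """
    if not 0 < target_rate <= 1:
        raise ValueError("target_rate must be in (0, 1]")
    # Round down so the expected rate is at or slightly above the target.
    return max(1, math.floor(threshold / (target_rate * avg_daily_sessions)))


# Hypothetical site with 20,000 sessions per day.
print(days_for_rate(0.5, 20_000))  # 50
print(days_for_rate(1.0, 20_000))  # 25 (longest range that stays unsampled)
```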

To capture the actual pageviews, I then ran a series of reports over shorter date ranges that each fell under the sampling threshold, and summed the results.
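Splitting a long date range into unsampled pieces can be planned automatically if you know the sessions per day. The greedy sketch below is hypothetical: it assumes you already have daily session counts and uses the 500,000-session threshold. It only plans the ranges; you would then run one report per range and add up the pageviews.

```python
from datetime import date, timedelta


def unsampled_chunks(daily_sessions: dict[date, int],
                     threshold: int = 500_000) -> list[tuple[date, date]]:
    """Group consecutive days into (start, end) ranges whose sessions stay <= threshold.

    Greedy: extend the current range until the next day would push it over the
    threshold. A single day above the threshold still becomes its own range
    (and would still be sampled).
    """
    chunks: list[tuple[date, date]] = []
    start = prev = None
    running = 0
    for day in sorted(daily_sessions):
        sessions = daily_sessions[day]
        if start is not None and running + sessions > threshold:
            chunks.append((start, prev))  # close the current range
            start, running = None, 0
        if start is None:
            start = day
        running += sessions
        prev = day
    if start is not None:
        chunks.append((start, prev))
    return chunks


# Hypothetical: ten days with 120,000 sessions each.
days = {date(2020, 1, 1) + timedelta(days=i): 120_000 for i in range(10)}
for start, end in unsampled_chunks(days):
    print(start, end)
# 2020-01-01 2020-01-04
# 2020-01-05 2020-01-08
# 2020-01-09 2020-01-10
```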

Note: if you are going to try this experiment yourself, I’d recommend using the Google Analytics API or downloading the data as CSV files so you can merge them using PowerShell or Command Prompt. This saves a lot of copying and pasting.
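If you’d rather script the merge in Python than use PowerShell or Command Prompt, a short sketch can sum pageviews across exported CSV files. It assumes each file has been trimmed to a header row of `Page`, `Default Channel Grouping`, and `Pageviews` followed by data rows (raw exports may include extra comment and summary lines that need removing first). The column names and the function `merge_exports` are assumptions, not a documented export format.

```python
import csv
from collections import defaultdict
from pathlib import Path


def merge_exports(folder: str) -> dict[tuple[str, str], int]:
    """Sum pageviews by (page, channel) across every CSV file in a folder.

    Assumes each file has a single header row: Page, Default Channel Grouping,
    Pageviews. Thousands separators such as "1,200" are stripped.
    """
    totals: dict[tuple[str, str], int] = defaultdict(int)
    for path in sorted(Path(folder).glob("*.csv")):
        with path.open(newline="", encoding="utf-8") as f:
            for row in csv.DictReader(f):
                key = (row["Page"], row["Default Channel Grouping"])
                totals[key] += int(row["Pageviews"].replace(",", ""))
    return dict(totals)
```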

The results

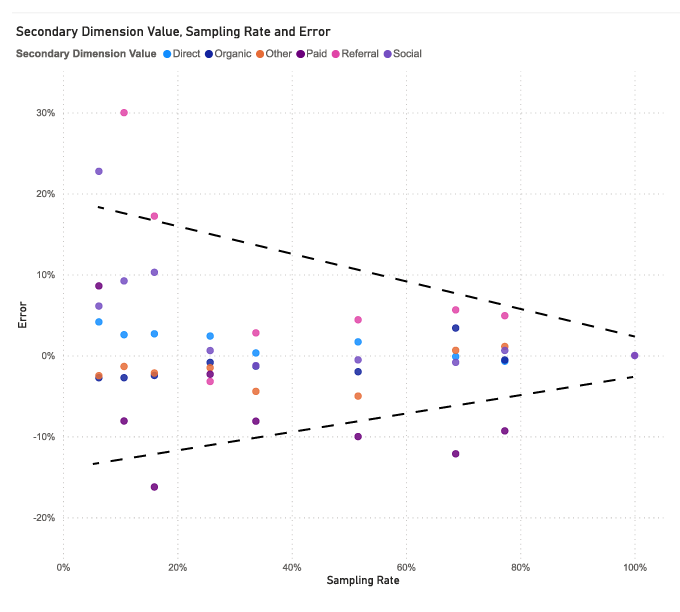

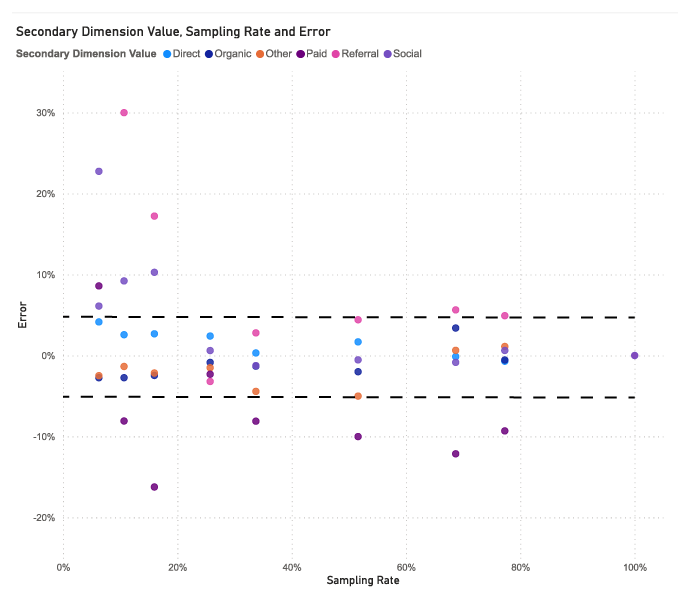

The chart below shows the error rate for each channel at different sampling rates. As expected, accuracy improves as the sampling rate increases (the dotted trend lines are approximate).

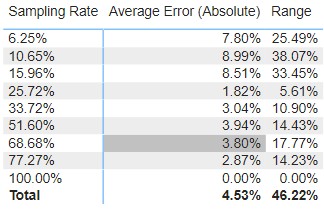

Because some values are under-reported and others over-reported, positive and negative errors would partly cancel out in a plain average. To account for this, I also created a table showing the average absolute error, along with the range of the errors.
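A summary like the one in that table can be reproduced with a small function. This sketch assumes the signed percentage errors for one sampling rate are collected in a list; the function name and the four error values are hypothetical. A plain average of those four values would be 0.0, which is exactly the cancellation the absolute value avoids.

```python
def summarize_errors(errors: list[float]) -> dict[str, float]:
    """Summarize signed percentage errors for one sampling rate.

    Averaging absolute values stops over- and under-reports from cancelling out.
    """
    if not errors:
        raise ValueError("need at least one error value")
    return {
        "mean_abs_error": sum(abs(e) for e in errors) / len(errors),
        "min_error": min(errors),
        "max_error": max(errors),
    }


# Hypothetical errors for four channels at one sampling rate.
print(summarize_errors([3.0, -1.0, 2.0, -4.0]))
# {'mean_abs_error': 2.5, 'min_error': -4.0, 'max_error': 3.0}
```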

As the table shows, even at 25% sampling the data is still fairly accurate: the average error is only about 2%. Error rates only climb sharply once sampling drops below 25%.

So how do we interpret these numbers?

Part of it depends on your tolerance for uncertainty. In a survey, an ideal margin of error might be under 5%. In this experiment, many values fell within plus or minus 5%, but not all of them did.
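To see how many results meet a given tolerance, you can count the errors whose magnitude falls within it. This is a hypothetical helper; the 5% default mirrors the survey benchmark above, and the sample errors are made up.

```python
def share_within(errors: list[float], tolerance: float = 5.0) -> float:
    """Fraction of signed percentage errors whose magnitude is <= tolerance."""
    if not errors:
        raise ValueError("need at least one error value")
    # True counts as 1 and False as 0 when summed.
    return sum(abs(e) <= tolerance for e in errors) / len(errors)


print(share_within([3.0, -1.0, 6.5, -4.0]))  # 0.75
```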

Part of it also depends on the values themselves and the type of analysis you are doing. If paid search and display advertising each account for 15% of your traffic and you are trying to determine which one drives more traffic, you can’t say definitively with a +/- 5% error.

But if you reframe your analysis, you may be able to draw conclusions that hold within the same margin of error. Using the hypothetical scenario above, if paid digital advertising represents 30% of traffic (15% display + 15% paid search) and organic represents 60%, you can safely say organic traffic is higher, even with a +/- 5% error.
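The reasoning in this example can be checked mechanically: one share is clearly larger only if its lowest plausible value still exceeds the other’s highest plausible value. The hypothetical helper below treats the +/- 5% as relative to each value, matching how this experiment measured error; reading it as 5 percentage points gives the same answers for these two examples.

```python
def clearly_larger(share_a: float, share_b: float, rel_error: float = 0.05) -> bool:
    """True if share_a beats share_b even in the worst case.

    Worst case: share_a is really rel_error lower and share_b rel_error higher.
    """
    return share_a * (1 - rel_error) > share_b * (1 + rel_error)


print(clearly_larger(0.60, 0.30))  # True  (organic vs. all paid digital)
print(clearly_larger(0.15, 0.15))  # False (paid search vs. display)
```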

Closing thoughts

So, how accurate is sampled Google Analytics data? The short answer is that it depends. If your report is a long list of blog articles sampled at 5%, it may not be very meaningful. If your report covers 10 sources sampled at 50%, you may be able to draw some safe conclusions.

Unfortunately, sampling may be unavoidable depending on the size of your query. If you are really concerned about the validity of your data, there are a few options:

- Upgrade to Analytics 360 (it’s expensive!)

- Find other analytics software that doesn’t sample

- MacGyver a workaround that runs multiple Google Analytics queries and combines them